PHD2024-13

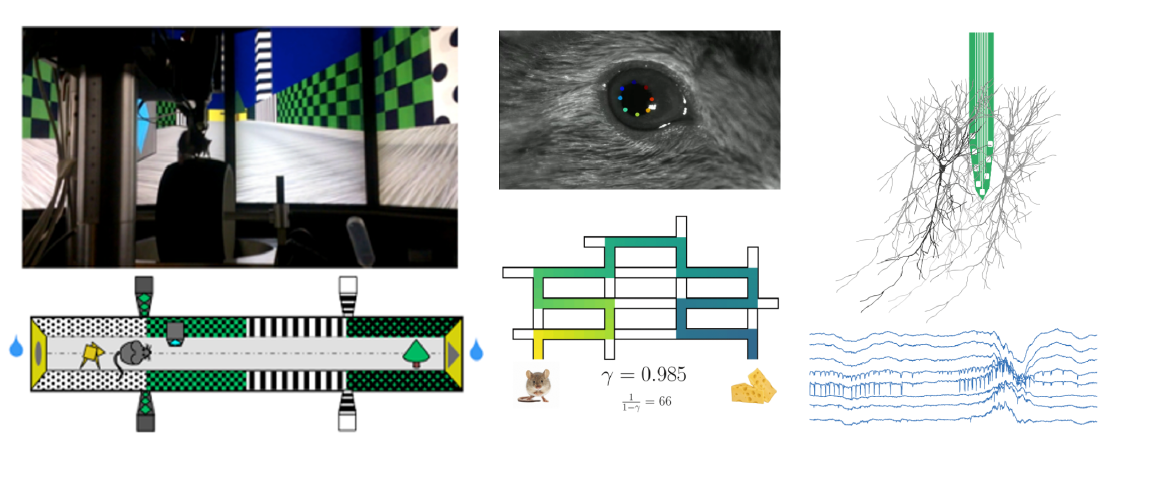

A place with a view: eye movements and hippocampal codes for navigating complex virtual environments

Host laboratory and collaborators

Jérôme Epsztein, Inmed jerome.EPSZTEIN@univ-amu.fr

Lorenzo Fontolan, Inmed/CPT lorenzo.FONTOLAN@univ-amu.fr

Abstract

Wayfinding is essential to our daily life. To survive, animals need to flexibly navigate their environment to find food or avoid predation. This ability relies in part on an internal cognitive map. The activity of spatially modulated neurons (called place cells) has been well characterized in the rodent hippocampus, an essential structure for spatial navigation. However, while vision is key for spatial exploration in humans, its role in rodents is understudied. First, we will use virtual reality (VR) for rodents coupled with eye tracking and large-scale neuronal recordings to determine the role of visual inputs in rodents’ spatial navigation. Then, we will use advanced analysis methods to infer spatial representations, internal states, and navigational strategies. Lastly, to elucidate the mechanisms underlying goal-directed navigation, we will compare our data with a reinforcement learning model trained to perform the same VR task, in which an artificial agent acts on the environment to achieve certain goals by learning the actions that maximize future rewards.

Keywords

Virtual reality, hippocampus, mice, successor representation, reinforcement learning, place cells, spatial coding, cognitive strategies

Objectives

Aim 1:

- Design a navigation task for head-fixed mice in a complex semi-realistic virtual environment.

- Use video oculography of both eyes to track eye movements during the VR navigation task in mice.

- Record and analyze neuronal activity in the rodents’ hippocampus and upstream visual areas during the navigation task.

Aim 2: Develop a reinforcement learning framework to model and predict the recorded spatial representations and the rodents’ navigational behavior via training of an artificial agent.

Proposed approach (experimental / theoretical / computational)

Experimental: We will develop a new virtual reality system for mice allowing 2D virtual navigation, together with a new navigation task in a large-scale 2D semi-realistic environment (aim 1a). We will assess navigation performance and focus of attention via measures of visual exploration and pupil diameter obtained through oculography (aim 1b). We will use large-scale electrophysiological recordings (Neuropixels and linear silicon probes) in the hippocampus and upstream cortical areas (aim 1c).

Computational/theoretical: We will devise a reinforcement learning framework to model the task (aim 2). This approach will be used to train an artificial neural network receiving sensory inputs from the same VR environment. In a second step, we will train recurrent neural networks to reproduce neural activity during the execution of the task and reverse-engineer the trained networks to reveal which mechanisms are enabled by hippocampal cells.
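To make the reinforcement learning idea concrete, the sketch below trains a tabular Q-learning agent in a small open arena. It is only an illustration under stated assumptions and not the model planned for the project: the proposal describes an artificial neural network receiving sensory inputs from the VR environment, whereas this example swaps in a lookup table over grid positions. The 5 x 5 arena, the single rewarded corner, the four-action set, and all names and parameter values (`train_q_learning`, `greedy_path`, `alpha`, `gamma`, `epsilon`) are hypothetical.

```python
import numpy as np

# Hypothetical action set: up, down, left, right as (row, column) offsets.
MOVES = [(-1, 0), (1, 0), (0, -1), (0, 1)]


def step(pos, action, size):
    """Move one cell; moves that would leave the arena are clipped at the wall."""
    row = min(max(pos[0] + MOVES[action][0], 0), size - 1)
    col = min(max(pos[1] + MOVES[action][1], 0), size - 1)
    return (row, col)


def train_q_learning(size=5, goal=(4, 4), episodes=500, max_steps=500,
                     alpha=0.1, gamma=0.95, epsilon=0.1, seed=0):
    """Tabular Q-learning with reward 1 at the goal and 0 everywhere else."""
    rng = np.random.default_rng(seed)
    q = np.zeros((size, size, len(MOVES)))
    for _ in range(episodes):
        pos = (0, 0)  # every episode starts in the same corner
        for _ in range(max_steps):
            if rng.random() < epsilon:
                # Explore: pick a uniformly random action.
                action = int(rng.integers(len(MOVES)))
            else:
                # Exploit: pick a best action, breaking ties at random.
                best = np.flatnonzero(q[pos] == q[pos].max())
                action = int(rng.choice(best))
            nxt = step(pos, action, size)
            done = nxt == goal
            # Target is the reward plus the discounted best value of the next state;
            # reaching the goal ends the episode, so nothing is bootstrapped there.
            target = 1.0 if done else gamma * q[nxt].max()
            q[pos + (action,)] += alpha * (target - q[pos + (action,)])
            pos = nxt
            if done:
                break
    return q


def greedy_path(q, start=(0, 0), goal=(4, 4)):
    """Follow the highest-valued action from start, with a cap on path length."""
    size = q.shape[0]
    path = [start]
    while path[-1] != goal and len(path) < size * size:
        action = int(np.argmax(q[path[-1]]))
        path.append(step(path[-1], action, size))
    return path


q_table = train_q_learning()
print(greedy_path(q_table))
```

The learned table assigns higher values to positions closer to the reward, so following the greedy action traces a route to the goal. In a setting closer to the proposal, the table would be replaced by a network mapping visual input to action values.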

Interdisciplinarity

The project will combine the expertise of the Epsztein lab (Partner #1) in using virtual reality (VR) for rodents, together with eye tracking and large-scale electrophysiology, to study spatial navigation, with the expertise of the Fontolan lab (Partner #2) in training artificial neural networks and in reinforcement learning. The virtual reality system for 2D spatial navigation will combine a treadmill on a rotating platform with pressure sensors that allow animals to change their orientation in VR; its development will benefit from the CENTURI multi-engineering platform. On the computational side, we will train an artificial neural network receiving visual information from the same VR environment to perform the navigation task. We will further train a recurrent neural network to reproduce hippocampal neural activity recorded during execution of the task, an approach pioneered by the Fontolan lab. This will lead to new insights into which mechanisms enabled by hippocampal cells are important for successful navigation.

Expected profile

The recruited PhD student will have a background in biology (with substantial quantitative skills) or in physics, engineering, or mathematics, with a keen interest in spatial navigation and computational neuroscience. Familiarity and ease with a modern programming language (MATLAB or Python) is strongly recommended.

Is this project the continuation of an existing project or an entirely new one? In the case of an existing project, please explain the links between the two projects

It is a new project.

2 to 5 references related to the project

- Attractor dynamics gate cortical information flow during decision-making. Finkelstein A, Fontolan L, Economo MN, et al. Nat Neurosci 24, 843–850 (2021). https://doi.org/10.1038/s41593-021-00840-6

- Dynamic control of hippocampal spatial coding resolution by local visual cues. Bourboulou R, Marti G, Michon FX, El Feghaly E, Nouguier M, Robbe D, Koenig J, Epsztein J. Elife. 2019 Mar 1;8:e44487. doi: 10.7554/eLife.44487.

- Eye movements reveal spatiotemporal dynamics of visually-informed planning in navigation. Zhu S, Lakshminarasimhan KJ, Arfaei N, Angelaki DE. Elife. 2022 May 3;11:e73097. doi: 10.7554/eLife.73097.

- Predictive maps in rats and humans for spatial navigation. de Cothi W, Nyberg N, Griesbauer EM, Ghanamé C, Zisch F, Lefort JM, Fletcher L, Newton C, Renaudineau S, Bendor D, Grieves R, Duvelle É, Barry C, Spiers HJ. Curr Biol. 2022 Sep 12;32(17):3676-3689.e5. doi: 10.1016/j.cub.2022.06.090.

- The cognitive architecture of spatial navigation: hippocampal and striatal contributions. Chersi, F., & Burgess, N. (2015). Neuron, 88(1), 64-77.

Two main publications from each PI over the last 5 years

- Discrete attractor dynamics underlies persistent activity in the frontal cortex. Inagaki, H. K., Fontolan, L., Romani, S., & Svoboda, K. (2019). Nature, 566(7743), 212-217.

- Attractor dynamics gate cortical information flow during decision-making. Finkelstein A*, Fontolan L*, Economo MN, et al. Nat Neurosci 24, 843–850 (2021). https://doi.org/10.1038/s41593-021-00840-6

- Dynamic control of hippocampal spatial coding resolution by local visual cues. Bourboulou R, Marti G, Michon FX, El Feghaly E, Nouguier M, Robbe D, Koenig J, Epsztein J. Elife. 2019 Mar 1;8:e44487. doi: 10.7554/eLife.44487.

- Kv1.1 contributes to a rapid homeostatic plasticity of intrinsic excitability in CA1 pyramidal neurons in vivo. Morgan PJ, Bourboulou R, Filippi C, Koenig-Gambini J, Epsztein J. Elife. 2019 Nov 27;8:e49915. doi: 10.7554/eLife.4991

Project's illustrating image